Comet Unveils Game-Changing Suite of Tools and Integrations to Accelerate Large Language Model Workflow for Data Scientists

Company boosts productivity and performance with introduction of cutting-edge LLMOps capabilities

NEW YORK--(BUSINESS WIRE)--Comet, the leading platform for managing, visualizing and optimizing models from training runs to production monitoring, today announced a new suite of tools designed to revolutionize the workflow surrounding Large Language Models (LLMs). These tools mark the beginning of a new market category, known as LLMOps. With Comet's MLOps platform and cutting-edge LLMOps tools, organizations can effectively manage their LLMs and enhance their performance in a fraction of the time.

Comet’s new suite of tools debuts as data scientists working on NLP are no longer training their own models; rather, they’re spending days working to generate the right prompts through prompt engineering or prompt chaining, in which data scientists create prompts based on the output of a previous prompt to solve more complex problems. However, data scientists haven’t had tools to sufficiently manage and analyze the performance of these prompts. Comet's offering enables them to embrace unparalleled levels of productivity and performance. Its tools address the evolving needs of the ML community to build production-ready LLMs and fill a gap in the market that, until now, has been neglected.

"Previously, data scientists required large amounts of data, significant GPU resources and months of work to train a model," commented Gideon Mendels, CEO and co-founder of Comet. "However, today, they can bring their models to production more rapidly than ever before. But the new LLM workflow necessitates dramatically different tools, and Comet's LLMOps capabilities were designed to address this crucial need. With our latest release, we believe that Comet offers a comprehensive solution to the challenges that have arisen with the use of Large Language Models."

Comet LLMOps Tools in Action

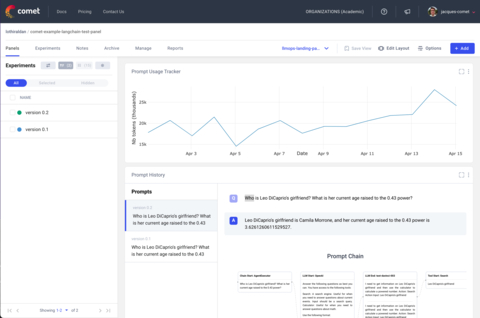

Comet’s LLMOps tools are designed to let users leverage the latest advancements in Prompt Management and query models in Comet so they can iterate more quickly, identify performance bottlenecks and visualize the internal state of their Prompt Chains.

The new suite of tools serves three primary functions:

- Prompt Playground: Comet’s Prompt Playground allows Prompt Engineers to iterate quickly with different Prompt Templates and understand their impact across different contexts.

- Prompt History: This debugging tool records prompts, responses and chains so teams can follow experimentation and decision-making through chain visualization tools.

- Prompt Usage Tracker: Teams can now track prompt usage at both the project and experiment level, giving them a granular view of how prompts are used.

Integrations with leading Large Language Models and Libraries

Comet also announced integrations with OpenAI and LangChain, adding significant value to users. Comet’s integration with LangChain allows users to track, visualize and compare chains so they can iterate faster. The OpenAI integration empowers data scientists to leverage the full potential of OpenAI's GPT-3 and capture usage data, prompts and responses so that users never lose track of their past experiments.

"The goal of LangChain is to make it as easy as possible for developers to build language model applications. One of the biggest pain points we've heard is around keeping track of prompts and prompt completions," said Harrison Chase, Creator of LangChain. "That is why we're so excited about this integration with Comet, a platform for tracking and monitoring your machine learning experiments. With Comet, users can easily log their prompts, LLM outputs and compare different experiments to make decisions faster. This integration allows LangChain users to streamline their workflow and get the most out of their LLM development."

For more information on the new suite of tools and integrations, please visit Comet's website, http://comet.com/site/products/llmops.

About Comet

Comet provides the leading platform for managing Machine Learning models all the way from early experimentation to monitoring them in production. It provides tools for experiment tracking, artifact management, model registries, collaboration and visualization, and supports integration with all of the popular ML frameworks. Comet's mission is to help Data Scientists and ML teams accelerate the development and deployment of ML models and help companies achieve business value from AI.

Contacts

Carmen Mantalas

carmen@gmkcommunications.com