Cerebras Systems Raises $1.1 Billion Series G at $8.1 Billion Valuation

Fidelity Management & Research Company Anchors Investment with an All-Star Consortium of Investors

SUNNYVALE, Calif.--(BUSINESS WIRE)--Cerebras Systems, makers of the fastest AI infrastructure in the industry, today announced the completion of an oversubscribed $1.1 billion Series G funding round at an $8.1 billion post-money valuation. The round was led by Fidelity Management & Research Company and Atreides Management, with significant participation from Tiger Global, Valor Equity Partners, and 1789 Capital, as well as existing investors Altimeter, Alpha Wave, and Benchmark.

As the fastest inference provider in the world, Cerebras will use these funds to expand its pioneering technology portfolio with continued inventions in AI processor design, packaging, system design and AI supercomputers. In addition, it will expand its U.S. manufacturing capacity and its U.S. data center capacity to keep pace with the explosive demand for Cerebras products and services.

“From our inception we have been backed by the most knowledgeable investors in the industry. They have seen the historic opportunity that is AI and have chosen to invest in Cerebras,” said Andrew Feldman, co-founder and CEO, Cerebras. “We are proud to expand our consortium of best-in-world investors.”

Inference Momentum as AI Industry Leaders Choose Cerebras

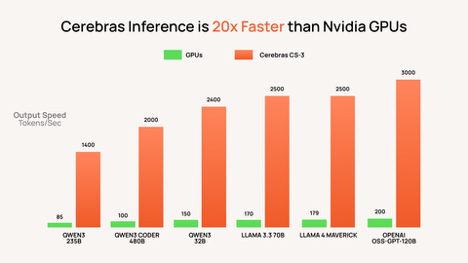

Cerebras has experienced extraordinary growth since launching its inference service in late 2024. Over the past year, Cerebras has held the performance crown every single day, routinely demonstrating speeds more than 20X faster than Nvidia GPUs on both open-source and closed-source models.

“Since our founding, we have tested every AI inference provider across hundreds of models. Cerebras is consistently the fastest,” said Micah Hill-Smith, CEO of leading benchmarking firm Artificial Analysis.

Cerebras’ performance advantage has led to massive demand. New real-time use cases, including code generation, reasoning, and agentic work, have increased both the benefit of speed and the cost of being slow, driving customers to Cerebras. Today, Cerebras serves trillions of tokens per month in its own cloud, on its customers’ premises, and across leading partner platforms.

In 2025, AI leaders including AWS, Meta, IBM, Mistral, Cognition, AlphaSense, Notion and hundreds more chose Cerebras, joining enterprises and governments including GlaxoSmithKline, Mayo Clinic, the U.S. Department of Energy and the U.S. Department of Defense. Individual developers have also chosen Cerebras for their AI work. On Hugging Face, the leading AI hub for developers, Cerebras is the #1 inference provider, with over 5 million monthly requests.

Citigroup and Barclays Capital acted as joint placement agents for the transaction.

About Cerebras Systems

Cerebras Systems builds the fastest AI infrastructure in the world. We are a team of pioneering computer architects, computer scientists, AI researchers, and engineers of all types. We have come together to make AI blisteringly fast through innovation and invention because we believe that when AI is fast it will change the world. Our flagship technology, the Wafer Scale Engine 3 (WSE-3), is the world’s largest and fastest AI processor. At 56 times larger than the largest GPU, the WSE-3 uses a fraction of the power per unit of compute while delivering inference and training more than 20 times faster than the competition. Leading corporations, research institutes and governments on four continents choose Cerebras to run their AI workloads. Cerebras solutions are available on premises and in the cloud. For further information, visit cerebras.ai or follow us on LinkedIn, X and/or Threads.

Contacts

Media Contact

PR@zmcommunications.com