Responsible AI Institute Launches Responsible AI Safety and Effectiveness (RAISE) Benchmarks to Operationalize and Scale Responsible AI Policies

Three Benchmarks Aligned with NIST and ISO 42001 Address Corporate AI Policy, AI Hallucinations and Vendor Alignment

AUSTIN, Texas & NEW YORK--(BUSINESS WIRE)--Responsible AI Institute (RAI Institute), a prominent non-profit organization dedicated to facilitating the responsible use of AI worldwide, has introduced three tools called the Responsible AI Safety and Effectiveness (RAISE) Benchmarks. The benchmarks are designed to help companies improve the integrity of their AI products, services and systems by integrating responsible AI principles into their development and deployment processes.

The RAISE Benchmarks arrive against the backdrop of rapid developments in generative AI and growing regulatory oversight, exemplified by President Biden's Executive Order on AI, the European Union's AI Act, Canada's Artificial Intelligence and Data Act, and the recent UK AI Safety Summit, where 28 nations signed an accord on developing safe AI. In this environment, the benchmarks are intended to guide organizations toward alignment with evolving global and local standards such as the NIST AI Risk Management Framework (RMF) and the ISO 42001 family of standards, which is still under development.

“In an era of accelerating AI advancements and increasing regulatory scrutiny, our RAISE Benchmarks provide organizations with the compass they need to chart a course of innovating and scaling AI responsibly, guiding them towards compliance with evolving global standards,” said Var Shankar, executive director of RAI Institute.

The RAISE Benchmarks announced today are independent, community-developed tools that are accessible to RAI Institute members through the non-profit’s responsible AI testbed, announced earlier this year.

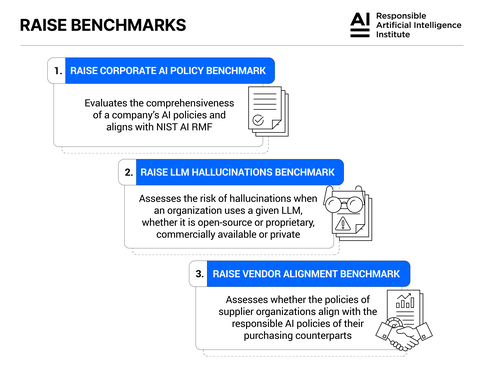

The initial RAISE Benchmarks announced today serve three purposes:

- RAISE Corporate AI Policy Benchmark: This benchmark evaluates the comprehensiveness of a company's AI policies by measuring their scope and their alignment with RAI Institute's model enterprise AI policy, which is based on the NIST AI RMF. Today, RAI Institute is releasing the methodology, FAQs and an initial demo of the RAISE Corporate AI Policy Benchmark to help organizations frame their AI policies so that they cover the new trustworthiness and risk considerations raised by generative AI and large language models (LLMs).

- RAISE LLM Hallucinations Benchmark: When building new AI-powered products and solutions, organizations often struggle to mitigate hallucinations, the unexpected, incorrect or misleading outputs common in LLMs. This benchmark helps organizations using LLMs, whether commercially available, open source or proprietary, assess their risk of hallucinations and take proactive measures to minimize them.

- RAISE Vendor Alignment Benchmark: This benchmark assesses whether the policies of supplier organizations align with the ethical and responsible AI policies of the organizations purchasing from them. It helps ensure that vendors' AI practices are consistent with the values and expectations of the businesses they serve.

“Cultivating trust in AI is not a luxury, it’s a necessity, one that requires diligence, flexibility and technical prowess. After leaving IBM Watson as general manager, I became intensely focused on creating an independent, community-driven non-profit to make responsible AI adoption achievable for enterprises at any level. I see this as my life’s mission. The RAISE Benchmarks are a testament to RAI Institute’s dedication to creating a more accountable AI ecosystem, where trust is sacrosanct,” said Manoj Saxena, founder and executive chairman of Responsible AI Institute.

The first three RAISE Benchmarks are currently available in private preview and will be generally available to RAI Institute members in Q2 2024, after further refinement based on feedback from the community and from businesses piloting the benchmarks during the first half of 2024. The RAISE Benchmarks will complement RAI Institute’s assessments, education modules, and policy, regulation and standards work in supporting members and the wider ecosystem.

The RAISE Benchmarks add unique value because they are vendor- and technology-agnostic, community-developed, applicable to both classic and generative AI, aligned with global AI standards, adaptable to regional requirements, and continuously improved.

Quotes From Supporting Organizations

“As an organization that supports the development of the Corporate AI Policy Benchmark, we've witnessed firsthand how this initiative brings clarity and structure to the ever-evolving landscape of AI governance. This benchmark empowers organizations to craft an AI ethics code that not only meets industry standards but also aligns seamlessly with their unique values and goals to ensure beneficial customer outcomes. It's a game-changer for responsible AI adoption,” said Dr. Paul Dongha, group head of data and AI ethics at Lloyds Banking Group.

“As an AI-first company and healthcare payment integrity leader, we endorse the RAISE Benchmarks. They tackle critical AI trustworthiness and safety dimensions for us and our clients such as a corporate AI policy, AI hallucinations and vendor conformity, offering a roadmap to bolster accuracy, ethics, and adherence to NIST and ISO standards,” said Rajeev Ronanki, CEO of Lyric.

“Incredibly transformative sophistication has happened in the development, deployment, governance and monitoring of AI applications in recent years, but with the explosion of evolutionary capabilities in the generative AI era, risk and strategy leaders foresee a compelling need for stronger AI standards benchmarking. Clear evidence that AI policies comply with external, industry-wide, national or international standards, such as RAISE offers, may be every risk leader’s dream come true and is paramount for scaling strategically responsible adoption of AI,” said Saima Shafiq, senior vice president and head of applied AI transformation at PNC Bank.

How to Become a RAI Institute Member

RAI Institute invites new members to join in driving innovation and advancing responsible AI. Collaborating with esteemed organizations like those mentioned, RAI Institute develops practical approaches to mitigate AI-related risks and fosters the growth of responsible AI practices.

About Responsible AI Institute (RAI Institute)

Founded in 2016, Responsible AI Institute (RAI Institute) is a global and member-driven non-profit dedicated to enabling successful responsible AI efforts in organizations. RAI Institute’s conformity assessments and certifications for AI systems support practitioners as they navigate the complex landscape of AI products. Members including ATB Financial, Amazon Web Services, Boston Consulting Group, Yum! Brands, Shell, Chevron, Roche and many other leading companies and institutions collaborate with RAI Institute to bring responsible AI to all industry sectors.

Contacts

Media Contacts

Audrey Briers

Bhava Communications for RAI Institute

rai@bhavacom.com

+1 (858) 522-0898

Nicole McCaffrey

Head of Marketing, RAI Institute

nicole@responsible.ai

+1 (440) 785-3588
