REDWOOD CITY, Calif.--(BUSINESS WIRE)--Numenta, Inc. announced it has achieved greater than 100x performance improvements on inference tasks in deep learning networks without any loss in accuracy. In a new white paper released today, Numenta detailed how it used sparse algorithms derived from its neuroscience research to achieve breakthrough performance in machine learning in a proof-of-concept demonstration.

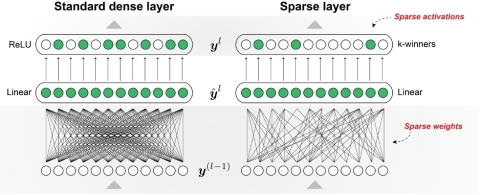

Sparsity offers new approaches for machine learning researchers to address performance bottlenecks as they scale to more complex tasks and bigger models. However, fully exploiting sparse networks can be difficult with today’s hardware limitations. As a result, most systems perform best with dense computations, and most sparse approaches today are restricted to narrow applications. Numenta’s sparse networks rely on key aspects of brain sparsity, most notably: activation sparsity (number of active neurons) and weight sparsity (interconnectedness of neurons). The combination of the two yields multiplicative effects, enabling large efficiency improvements.
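The multiplicative effect can be illustrated with a small Python sketch. A multiplication contributes to a layer's output only when both the weight and the activation are nonzero, so the fraction of useful multiplications is roughly the product of the two densities. The function names and the 10% densities below are illustrative assumptions, not figures from the white paper, and the resulting ratio is a theoretical upper bound rather than a measured hardware speed-up.

```python
import random


def random_sparse_vector(n, density, rng):
    """Return a length-n list in which round(n * density) entries are nonzero."""
    nonzero_positions = set(rng.sample(range(n), round(n * density)))
    return [1.0 if i in nonzero_positions else 0.0 for i in range(n)]


def count_nonzero_products(weights, activations):
    """Count positions where both the weight and the activation are nonzero."""
    return sum(1 for w, a in zip(weights, activations) if w != 0 and a != 0)


def expected_useful_fraction(weight_density, activation_density):
    """Expected fraction of useful multiplications for independent sparsity patterns."""
    return weight_density * activation_density


if __name__ == "__main__":
    rng = random.Random(0)          # fixed seed so runs are repeatable
    n = 100_000                     # hypothetical number of weight/activation pairs
    weights = random_sparse_vector(n, 0.10, rng)      # assumed 10% weight density
    activations = random_sparse_vector(n, 0.10, rng)  # assumed 10% activation density

    useful = count_nonzero_products(weights, activations)
    print("useful multiplications:", useful, "of", n)
    print("expected fraction:", expected_useful_fraction(0.10, 0.10))
```

With both densities at 10%, only about 1% of the dense multiplications remain, which is where a theoretical two-orders-of-magnitude reduction in arithmetic would come from under these assumptions.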

In a new paper released today, Numenta demonstrates that it is possible to construct sparse networks that deliver equivalent accuracy to their dense counterparts, and can be fully leveraged by today’s hardware, delivering over two orders of magnitude performance improvement.

For the demonstration, Numenta ran its algorithms on Xilinx Field Programmable Gate Arrays (FPGAs) using the Google Speech Commands (GSC) dataset. The demonstration included two off-the-shelf Xilinx FPGAs and platforms: the Alveo™ U250 and the Zynq™ UltraScale+ ZU3EG. The Alveo U250 is a powerful platform designed for datacenters, while the Zynq class of FPGAs is much smaller and designed for embedded applications.

Numenta previously announced a 50x speed-up using a Sparse-Dense implementation, which takes full advantage of sparse weights but not sparse activations. For this demonstration, the company added a ‘Sparse-Sparse’ implementation, which takes advantage of both. Specifics regarding these sparse implementations and more technical details can be found in the Numenta white paper.
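The difference between the two approaches can be sketched in software with a single dot product. This is a minimal illustration only: it does not reproduce Numenta's FPGA implementations, and the function names and the dictionary-based sparse format are assumptions made for the example. The Sparse-Dense version skips zero weights but reads every matching activation, while the Sparse-Sparse version multiplies only where both the weight and the activation are nonzero.

```python
def dense_dot(weights, activations):
    """Baseline: one multiplication per position, zeros included."""
    return sum(w * a for w, a in zip(weights, activations))


def to_sparse(vector):
    """Store only the nonzero entries as an {index: value} mapping."""
    return {i: v for i, v in enumerate(vector) if v != 0}


def sparse_dense_dot(sparse_weights, activations):
    """Sparse weights, dense activations: one multiplication per nonzero weight."""
    return sum(w * activations[i] for i, w in sparse_weights.items())


def sparse_sparse_dot(sparse_weights, sparse_activations):
    """Both operands sparse: multiply only at indices that are nonzero in both."""
    # Walk the smaller mapping and look up each index in the larger one.
    small, large = sorted((sparse_weights, sparse_activations), key=len)
    return sum(v * large[i] for i, v in small.items() if i in large)


if __name__ == "__main__":
    weights = [0.0, 2.0, 0.0, 0.0, 3.0, 0.0]      # hypothetical sparse weights
    activations = [1.0, 0.0, 0.0, 0.0, 4.0, 0.0]  # hypothetical sparse activations

    print(dense_dot(weights, activations))
    print(sparse_dense_dot(to_sparse(weights), activations))
    print(sparse_sparse_dot(to_sparse(weights), to_sparse(activations)))
```

All three functions return the same value; they differ only in how many multiplications they perform to get there (six, two, and one respectively for the vectors above).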

The dramatic speed improvements enabled by Numenta’s sparse algorithms are expected to bring several specific benefits to machine learning, including:

- Implementation of far larger networks using the same resource

- Implementation of more copies of networks on the same resource

- Implementation of more sophisticated sparse networks on edge platforms with limited resources where the corresponding dense networks do not fit

- Massive energy savings and lower costs due to scaling efficiencies

“Learning from neuroscience offers the only sure path to achieving real machine intelligence. These results validate this approach and give us confidence that we will see substantial additional progress in the years ahead,” said Jeff Hawkins, Numenta co-founder.

Beyond the greater efficiencies with extremely sparse networks, employing the principles of neuroscience can lead to dramatically improved training times, shrink the size of training sets, enable continual learning similar to that of humans (eliminating the need to constantly retrain the model), and eventually provide several orders of magnitude improvements in scaling.

“This technology demonstration is just the beginning of a robust roadmap based on our extensive neuroscience research,” emphasized Subutai Ahmad, Numenta’s VP of Research and Engineering. “In the future, we expect to see additional benefits in generalization, robustness, and sensorimotor behavior.”

For more information, contact the company at sparse@numenta.com.

About Numenta

Numenta scientists and engineers are working on one of humanity’s grand challenges: understanding how the brain works. The company has a dual mission: to reverse-engineer the neocortex and to enable machine intelligence technology based on cortical theory. Co-founded in 2005 by technologist and scientist Jeff Hawkins, Numenta has developed a theory of cortical function, called the Thousand Brains Theory of Intelligence. Hawkins’ new book, A Thousand Brains, details the discoveries that led to the theory and its potential impact on Artificial Intelligence. The team tests their theories via simulation, mathematical analysis, and academic and industry collaborations. Numenta publishes its progress in open access scientific journals, maintains an open source project, and licenses its intellectual property and technology for commercial purposes.

Numenta, Google, Xilinx, Alveo and Zynq are trademarks of their respective owners.